A Practical Guide to Exploratory Data Analysis (EDA) Using Python

- shivamshinde92722

- Dec 15, 2022

- 7 min read

In this article, I will explain one of the most important parts of the lifecycle of a data science project, i.e., exploratory data analysis step by step with code.

We will use the Kaggle Spaceship Titanic dataset to demonstrate exploratory data analysis (EDA).

The first step in the machine learning project is to explore the data. Let’s start.

Importing some basic libraries

Reading the train data

To read the data, we will use the Pandas read_csv function. The read_csv function takes a path to the CSV file as a first argument.

Removing the unnecessary features (PassengerId and Name)

By our intuition, we can tell that the Outcome of the person’s survival is not dependent on his/her name or a passenger ID so, we will remove those two features from the data.

Finding the basic info about the data

Splitting the data into two parts: one for independent features and the other for dependent features

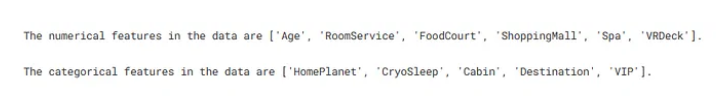

Getting the names of numerical and categorical features in the data

Transforming the ‘Cabin’ feature into three different features and then removing the original ‘Cabin’ feature

The ‘Cabin’ feature is made of three pieces of information: Cabin desk, Cabin number, and Cabin side. We will remove the forward slash and separate these three pieces of info and will create three new features containing those three parts of the Cabin feature. Also, after creating three new features, we will remove the original Cabin feature.

Checking the names of new numerical and categorical features after Cabin feature transformation

Some new features are created and some older features are removed. So, let’s find the names of new categorical and numerical features again after transformation.

Note that this method to find the numerical features will give improper results if we have a feature with a boolean data type. Since we do not have such a feature in our dataset, we can safely use this method.

Checking if there are any features with zero variance

Since the higher the variance of the data, the higher its contribution to the prediction of the dependent feature, we will remove any feature that does not contribute to the prediction i.e., the feature with zero variance.

This won’t give any output i.e., the data don’t have any numerical features with zero variance.

This code block also won’t give any output i.e., the data don’t have any categorical feature with zero variance.

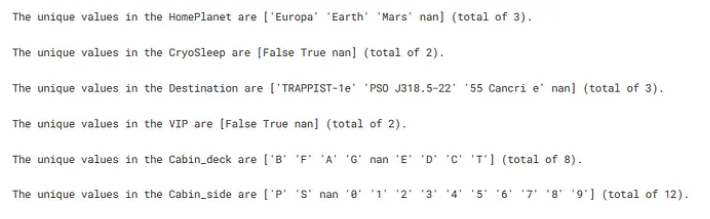

Checking the unique values in the categorical features

Checking the unique values in the categorical feature data and their count. If any of the categorical features have a very high number of unique values then we will remove that column. This is because such columns are more likely not to contribute much to our model training.

Here we don’t seem to have a categorical feature with a very high number of unique values.

Checking the correlation between independent features and the dependent features

Let’s check the correlation between the features of the data. This will tell us many things. Some of these are:

The correlation between independent features and the dependent feature. If any of the independent features have a very low correlation with our dependent feature, then that independent feature will contribute very less to our model training. So, we can remove that feature. This will allow us to keep only the relevant features for our model training and this in turn will reduce the overfitting of the model on the data.

The correlation between independent features. This will tell us which independent features are highly correlated. Let’s say we have two highly correlated features so in such a case, we can easily remove one of them from our data. This will make our model more robust and also prevent overfitting.

Finding a correlation between numerical independent features and the dependent feature.

Here we didn’t find any two numerical independent features which are highly correlated with each other.

Finding a correlation between categorical independent features and the dependent feature.

Here also we didn’t find any two categorical features which are highly correlated. Note that here we are performing an encoding on the categorical features for the correlation function to work.

Checking out the presence of missing values in our data

Let’s visualize the missing values for fun.

In the above diagram, missing values are shown with the white colored lines in each of the feature of data.

The data seems to have a very low amount of missing values (approx. 2% of the whole data size).

Handling missing values is one of the most important tasks in the data preprocessing step. Removing or imputing is done very frequently to deal with missing data. But it is not always the best option to deal with missing data. In some cases, the missing data might be due to some specific condition while gathering the data or there might be a case where the data is missing in some specific pattern.

To make this clear, let’s take an example. Suppose we are doing a survey of college students. One of the questions in the survey asks for the weight of a person. Then, in this case, some girl students might be hesitant to put down their weight in the survey (This is due to the notion that college girls are more aware of their weight than boys. I don’t know if it is true but, let’s assume it for the sake of our example). So, we will get a lot of missing values for age in the case of girls. This is an example of missing values due to some pattern.

Let’s consider another case of our survey. Suppose another question in our survey is about muscle strength. For this type of question, a lot of college boys might be hesitant to put down their response (This is again due to a similar notion that college boys are quite aware of their physique or strength). So, we will get a high number of missing values in the case of boys.

We need to make a decision on how to handle the missing values based on criteria similar to the ones mentioned above. One way to keep the pattern of missing values while replacing the missing values is to use Scikit-Learn’s SimpleImputer with its argument named add_indicator.

Checking the presence of the outliers in the data using the distribution plots and boxplots

Using distribution plots

We can confirm the presence of outliers in the data if the distribution of features in the data is skewed.

2. Using boxplots

The boxplot is a more intuitive way to visualize the outliers in our data.

If value > (quartile3 value + constant * interquartile-range) ==> value is outlier

If value < (quartile1 value — constant * interquartile range) ==> value is outlier

Usually, the constant value used is 1.5. In the boxplot, outliers are represented by hollow circles most of the time.

Note that this distribution is before dealing with the missing values in the data. The distribution might change if we impute the missing value. So it is good practice to check the distribution of the data after dealing with the missing values in the data.

For the problem at hand, we should analyze the outlier before removing it completely from our data since sometimes the presence of the outlier may help us in our purpose. For example, if we are doing a credit card fraud detection, then in such case we are looking for outliers in our data to detect fraud. So, it does not make sense to remove outliers. But on the other hand, outliers can also make our model less accurate. The following figure tells how one should go about analyzing outliers for a problem.

Our purpose here is to predict whether a passenger survived or not given his info such as his age, how much did he spend on room service and etc. So, for our problem outliers would be the people who are very old or very young, people who spent a very high or very low amount on room service, food court, shopping mall, spa, VRDeck and etc.

Let’s go by the above diagram to analyze the outliers. In our problem statement, outliers such as data of people with very high age cannot be considered as a result of any data entry. Also, these outliers will most probably affect our model since these outliers amount to a very small part of our whole data and hence cannot be said to represent our whole data. So, it is wise to remove outliers from our data.

Checking the multi-collinearity between the independent features using pairplot and using VIF value

In our analysis, multi-collinearity occurs when two or more independent features are highly correlated with each other, such that they do not provide unique information to our machine learning model training process. We can check this using three methods: using a correlation plot (just like the one we created above), using pairplot, and using VIF value. Out of these three, VIF is a sure way to check for multi-collinearity.

Here we will check the multi-collinearity for numerical features.

Using pairplot

We can be sure of multi-collinearity between two features if their plot is showing some kind of pattern.

We don’t see much of a pattern between the numerical features in our data.

2. Using VIF value

Interpreting the VIF values:

VIF = 1: There is no correlation between a given predictor variable and any other predictor variables in the model.

VIF between 1 and 5: There is a moderate correlation between a given predictor variable and other predictor variables in the model.

VIF > 5: There is a severe correlation between a given predictor variable and other predictor variables in the model.

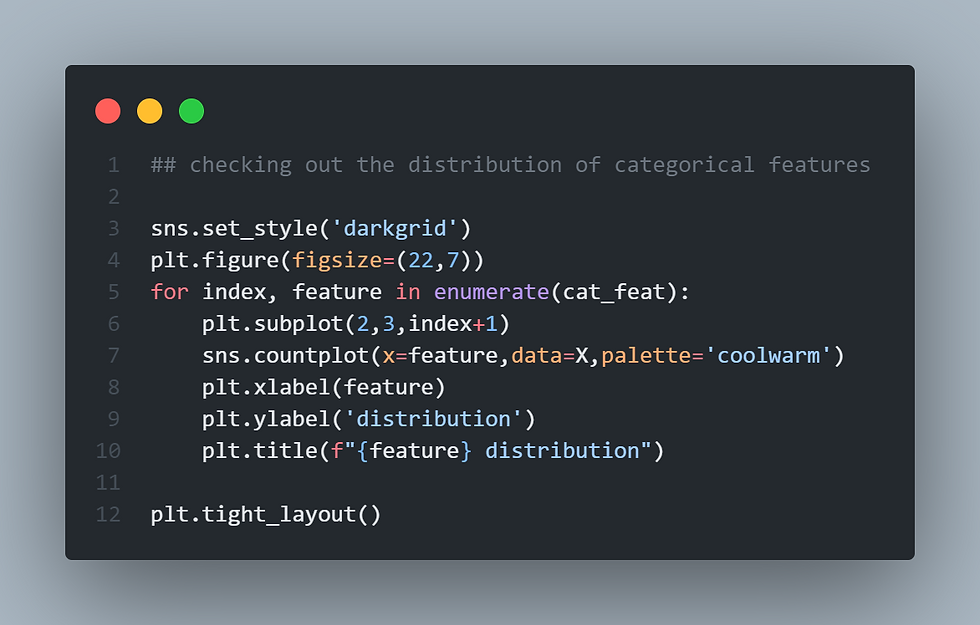

Checking out the distribution of categorical features

Checking if the dataset is imbalanced or not

A dataset is called imbalanced if the number of data points for each of the unique values in the dependent feature differs by a high number. An imbalanced dataset creates problems while splitting the dataset into training and validation data. And the machine learning model trained on such data won’t generalize well on the new data.

Here we can see that the count of ‘True’ and ‘False’ does not differ by a large amount. So we can conclude that our data is not imbalanced.

This is a very basic explanation of how usually an exploratory data analysis (EDA) is done. There might be many other steps involved in the EDA, but those come from practice. Also, there are many other ways to create different types of interesting and intuitive graphs to visualize the data, outliers, missing values, and much more. Exploring such things is something I would highly recommend. To find many such interesting ideas, Kaggle is a great place.

Resources

One of the EDA step was performed to find out the features with zero variance. To know how the variance is related to the amount of information a feature holds, check out the following article by Casey Cheng.

Know more about boxplots on Wikipedia.

The code used in this article is borrowed from my Kaggle notebook. Check out the whole code using the following link.

I hope you like the article. If you have any thoughts on the article then please let me know. Any constructive feedback is highly appreciated.

Have a great day!

Comments